The longer you talk to your AI, the more it starts thinking like you on a bad day: distracted, overloaded and unfocused.

At first, it’s brilliant. Every response hits the mark.

Whether it’s ChatGPT, Claude, Gemini or Perplexity, the insights are sharp, the tone perfect, the rhythm flawless.

Every answer lands like it read your mind.

Then it happens.

You ask a new question and it replies with something… off.

It repeats itself.

It forgets what you said two minutes ago.

It gives safe, obvious, corporate-sounding nonsense.

You try rephrasing the prompt. You remind it of what you meant. You even copy and paste your earlier instructions again.

But somehow, everything you get back feels blander, slower and less accurate.

It is like the model got tired or, worse, lazy.

But here is the uncomfortable truth. The AI did not get worse.

You buried it alive in its own memory.

You are experiencing what is called context rot.

You know it has crept in when:

- The AI stops writing like you and starts writing like anyone

- Its summaries sound robotic or off-topic

- It contradicts things it said earlier

- It misses simple connections it nailed before

- You start wasting time clarifying, correcting and repeating yourself

It feels like your once brilliant AI has turned into a distracted intern.

And the worst part is, it is your own instructions that caused it.

If you don’t learn how to control it, context rot will quietly drain your focus and productivity every single day.

What Is Context Rot?

Context rot happens when your AI’s working memory, known as the context window, gets clogged with too much conflicting, irrelevant or outdated information.

Think of it as the digital version of mental overload.

At the start of a chat, the model is laser-focused on your task.

But after dozens of messages, it is also trying to remember:

- The first instructions you gave

- The 10-page document you pasted halfway through

- The changes you made later

- The examples you corrected

- And all the small side questions along the way

At that point, it is no longer reasoning clearly. It is juggling chaos.

The signal-to-noise ratio has collapsed.

And because large language models are designed to take every token seriously, they do not ignore the junk. They try to reconcile it all.

That is how clarity turns to confusion.

Why It Happens: The Research Behind the Problem

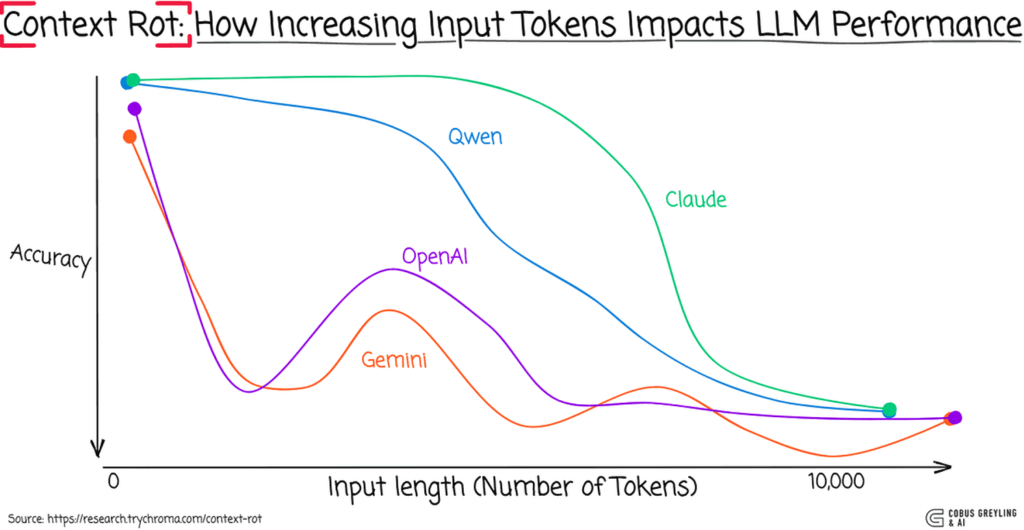

A 2025 study by Chroma Research tested 18 large language models, including GPT-4.1, Claude 4 and Gemini 2.5, to see how they handle long inputs.

The findings were clear.

As context length grew, performance dropped even when the added text was irrelevant.

In other words, the more the models “remembered”, the worse they performed.

The research found that:

- Adding distractor content, even on the same topic, reduced accuracy significantly

- Models struggled more when similar ideas overlapped, a phenomenon called semantic interference

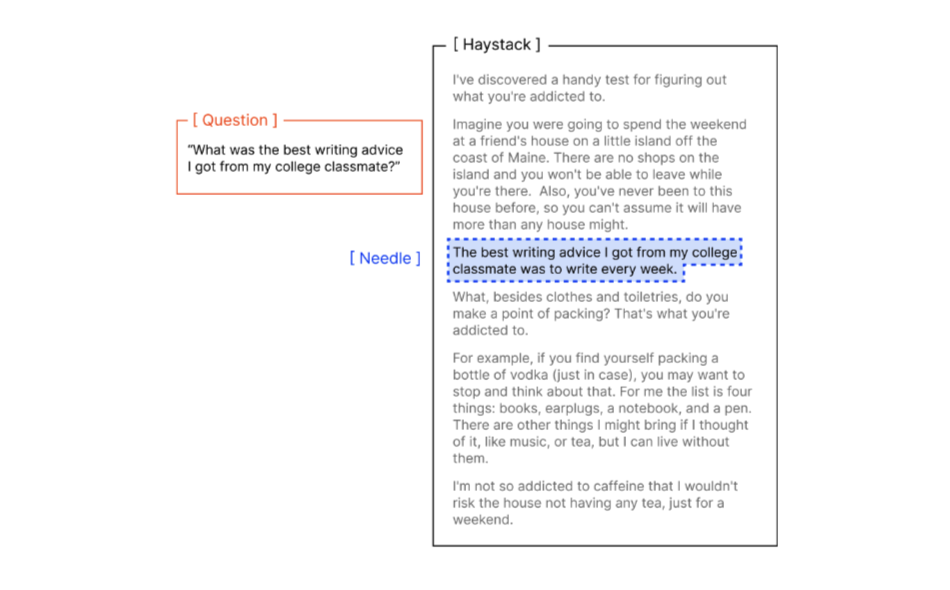

To prove this, researchers used a diagnostic called the needle in a haystack test.

The idea is simple.

Hide a single relevant fact (the needle) in a long document of unrelated text (the haystack), then ask the model to retrieve it.

What happens?

Even the best large language models start missing the needle once the haystack grows beyond a few thousand tokens.

Accuracy collapses not because the information isn’t there, but because the model gets lost in its own context.
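If you want to try the idea on your own model, here is a minimal Python sketch of how such a test prompt might be put together. It is not the researchers' actual harness. The filler sentences, the needle and the function names `build_haystack` and `found_needle` are all invented for illustration.

```python
import random

# Hypothetical filler and needle, invented for this sketch.
FILLER = [
    "The quarterly meeting was moved to Thursday.",
    "Office plants should be watered once a week.",
    "The cafeteria now serves oat milk.",
    "Parking permits renew every January.",
]
NEEDLE = "The access code for the archive room is 7421."


def build_haystack(needle: str, n_filler: int, position: float, seed: int = 0) -> str:
    """Bury `needle` among `n_filler` filler sentences.

    `position` is relative: 0.0 puts the needle first, 1.0 puts it last.
    """
    rng = random.Random(seed)  # seeded so the same haystack can be rebuilt
    sentences = [rng.choice(FILLER) for _ in range(n_filler)]
    sentences.insert(round(position * n_filler), needle)
    return " ".join(sentences)


def found_needle(answer: str, expected: str) -> bool:
    """Crude scoring: did the model's answer contain the expected fact?"""
    return expected.lower() in answer.lower()


haystack = build_haystack(NEEDLE, n_filler=200, position=0.5)
prompt = haystack + "\n\nQuestion: What is the access code for the archive room?"
# Send `prompt` to the model of your choice, then score the reply with
# found_needle(reply, "7421").
```

Repeating the run with larger `n_filler` values and different `position` values is one way to check whether retrieval holds up as the haystack grows.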

It’s the perfect metaphor for context rot:

The longer your conversation, the harder it becomes for the model to find what still matters.

The conclusion was blunt.

Bigger memory does not mean better results.

More context often means more confusion if it is not relevant.

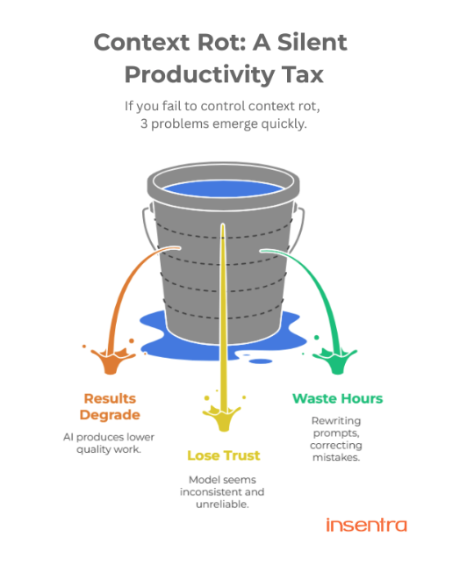

What Happens If You Ignore It

If you do not manage context rot, three things happen fast.

That is how context rot becomes a silent tax on productivity, creativity and clarity.

How to Minimise Context Rot (and Keep AI Thinking Clearly)

Here are six strategies that stop your AI from decaying mid-project.

- Be Ruthlessly Selective with Inputs

Do not dump everything into the model.

Feed it only what it needs to do the job.

Bad:

“Here’s our 5,000-word Q4 report. What are the key takeaways?”

Better:

“Here’s the 200-word sales section. What’s our biggest opportunity?”

If you would not hand a colleague a filing cabinet to answer one question, do not hand it to your AI.
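If you prepare prompts in code, the same habit can be sketched in a few lines of Python. This is only one crude way to do it: plain keyword matching stands in for whatever selection method you prefer, and the function name `select_relevant` and the sample report text are made up for the example.

```python
def select_relevant(document: str, keywords: list[str]) -> str:
    """Keep only the paragraphs that mention at least one keyword."""
    paragraphs = [p.strip() for p in document.split("\n\n") if p.strip()]
    wanted = [
        p for p in paragraphs
        if any(keyword.lower() in p.lower() for keyword in keywords)
    ]
    return "\n\n".join(wanted)


# Made-up report text, for illustration only.
report = (
    "Headcount grew across all regions.\n\n"
    "Sales in the mid-market segment rose while enterprise deals slowed.\n\n"
    "The office lease was renewed for three years."
)
excerpt = select_relevant(report, ["sales"])
prompt = f"Here's the sales section:\n\n{excerpt}\n\nWhat's our biggest opportunity?"
```

Only the sales paragraph reaches the model. The headcount and lease paragraphs never enter the context window.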

- Bracket Your Non-Negotiables

If something is crucial, repeat it at the start and end of your prompt.

Example:

“Write this for a VP audience, strategic and free of jargon. [Details here]

Remember: VP audience, strategic and jargon-free.”
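The bracketing pattern in that example can be sketched as a small Python helper. The function name `bracket_prompt` is hypothetical, and the exact wording of the reminder is a choice, not a rule.

```python
def bracket_prompt(requirement: str, details: str) -> str:
    """State the key requirement before the details and repeat it after them."""
    return f"{requirement}\n\n{details}\n\nRemember: {requirement}"


prompt = bracket_prompt(
    requirement="Write this for a VP audience, strategic and free of jargon.",
    details="[Details here]",
)
```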

Models prioritise beginnings and endings. Reinforce what matters most to avoid style drift or forgetfulness.

- Structure Prompts Like a Brief

AI thrives on structure. Use clear formatting to communicate context when prompting.

TASK: Create three LinkedIn post ideas

AUDIENCE: IT Managers

TONE: Professional and authoritative

FORMAT: Hook + body + call to action

CONSTRAINT: Under 150 words each
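If you build prompts in code, a brief like the one above can be generated from named fields so every prompt follows the same layout. This is a minimal Python sketch. The helper name `build_brief` is an assumption, not part of any tool.

```python
def build_brief(**fields: str) -> str:
    """Render each field as an upper-case LABEL: value line, in the order given."""
    return "\n".join(f"{label.upper()}: {value}" for label, value in fields.items())


brief = build_brief(
    task="Create three LinkedIn post ideas",
    audience="IT Managers",
    tone="Professional and authoritative",
    format="Hook + body + call to action",
    constraint="Under 150 words each",
)
```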

You are not over-explaining. You are designing clarity.

- Use the Two-Step Extraction Method

When working with long documents, split the process into two stages.

Step 1: “Summarise this report into five bullet points.”

Step 2: “Based on those five points, what strategy should we use?”

This keeps the model’s mental workspace clean and focused.
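In code, the two steps might look like the Python sketch below. The `ask` parameter is a placeholder for however you call your model. Here it is filled by `fake_model`, a stand-in invented for this example that records the prompts it receives, so you can see what each step actually sends.

```python
from typing import Callable


def two_step(report: str, question: str, ask: Callable[[str], str]) -> str:
    """Summarise first, then reason over the summary only."""
    summary = ask(f"Summarise this report into five bullet points.\n\n{report}")
    # The second prompt carries the summary, not the full report.
    return ask(f"Based on these five points, {question}\n\n{summary}")


sent_prompts: list[str] = []


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; records each prompt it receives."""
    sent_prompts.append(prompt)
    return "- point one\n- point two"


answer = two_step("FULL REPORT TEXT", "what strategy should we use?", fake_model)
```

The point of the design is in the second call: the long report never appears in it, only the short summary does.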

It is the equivalent of pre-digesting information before analysis.

- Reset Context Like You Reset Your Router

When switching topics or noticing lower quality, start a new chat.

Each thread collects old assumptions and tone. Starting fresh clears the slate.
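For anyone driving a model from code, a fresh start that keeps only the finished work might be sketched like this in Python. The list of `role` and `content` dictionaries mirrors a message shape many chat APIs use, but treat it as an assumption. The helper name `fresh_chat` and the sample history are invented for the example.

```python
def fresh_chat(final_output: str, next_request: str) -> list[dict[str, str]]:
    """Start a new message list that carries only the last finished output."""
    return [
        {
            "role": "user",
            "content": f"Here is the work so far:\n\n{final_output}\n\n{next_request}",
        }
    ]


# Invented history, for illustration only.
old_chat = [
    {"role": "user", "content": "Draft a launch email."},
    {"role": "assistant", "content": "First draft..."},
    {"role": "user", "content": "Shorter, please."},
    {"role": "assistant", "content": "Final draft of the launch email."},
]
new_chat = fresh_chat(old_chat[-1]["content"], "Now write a subject line for it.")
```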

If you need continuity, paste the final output into a new chat rather than dragging the history along.

- Ask for Citations

Always request: “Cite your sources for each claim.”

It keeps answers grounded in facts instead of fuzzy guesses from earlier turns.

You will spot errors faster and see when the model leans on outdated context.

The Needle

Most people think prompt engineering is about crafting clever phrases.

It’s not. It’s about structuring information: designing how knowledge flows into the model’s mind.

You’re not chatting with AI. You’re architecting its thinking space.

Your job is to:

- Keep the brief sharp and specific

- Remove irrelevant or outdated data

- Reset the chat when context shifts

- Reinforce key requirements

When you do, the model performs like a world-class assistant.

When you don’t, it decays into a wordy mess.

The Real Intelligence Is in the Brief

Context rot is not a software flaw. It is a management flaw, a reflection of how we handle information, not how the model processes it.

Your AI is not losing intelligence. It is drowning in detail.

It is doing exactly what you asked. It just cannot tell what still matters.

If you want sharper thinking, give it sharper inputs.

If you want consistency, give it structure.

If you want creativity, clear away the clutter.

Clean context creates clear reasoning.

And clear reasoning drives reliable intelligence, whether it comes from a human or a machine.

So, the next time your AI starts sounding dull or confused, do not assume it has lost its edge.

It has simply lost its focus.

Your job is to bring that focus back, one clean, disciplined brief at a time.

Join the Insentra Generative AI Sprint and learn how to stop context rot before it derails your next prompt.