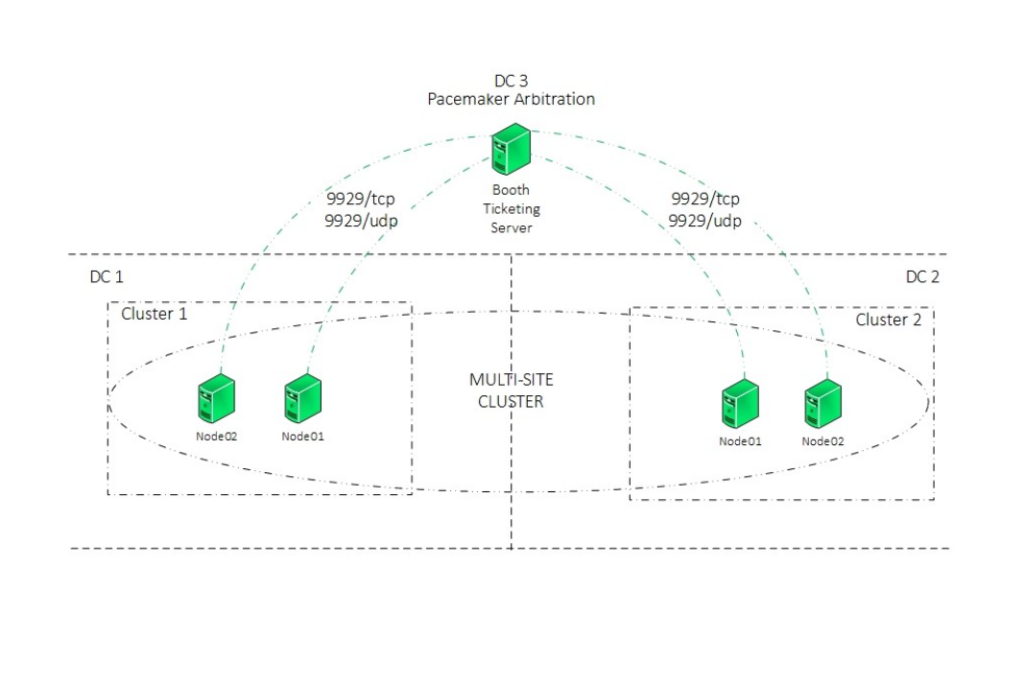

When a cluster spans more than one site (a multi-site topology), network connectivity problems between the sites can lead to split-brain situations. When connectivity drops, a node on one site has no way to determine whether a node on another site has failed or is still functioning behind a failed inter-site link.

Additionally, it can be problematic to provide high-availability services across two sites that are too far apart to keep synchronous. To address these issues, Pacemaker supports cluster arbitration for multi-site topologies through the Booth cluster ticket manager.

What is a Booth Cluster Ticket Manager?

The Booth ticket manager is a distributed service that is meant to be run on a different physical network than the networks that connect the cluster nodes at particular sites. It yields another loose cluster, a Booth formation, that sits on top of the regular clusters at the sites. This aggregated communication layer facilitates consensus-based decision processes for individual Booth tickets.

A Booth ticket is a singleton in the Booth formation and represents a time-sensitive, movable unit of authorisation. Resources can be configured to require a certain ticket to run. This ensures that those resources run at only one site at a time: the site to which the ticket (or tickets) has been granted.
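All of a Booth formation's members and tickets are described in one shared file, /etc/booth/booth.conf, which the configuration steps below generate. As a minimal sketch, assuming the plain `key = value` layout written by `pcs booth setup` and `pcs booth ticket add` (one `site` line per cluster plus `arbitrator` and `ticket` lines), the hypothetical shell function `check_booth_conf` confirms that a file describes the two-site, one-arbitrator formation used in this guide with at least one ticket. The function and its checks are illustrative only; they are not part of pcs or Booth.

```shell
#!/bin/sh
# Hypothetical helper (not part of pcs or Booth): sanity-check a booth.conf
# for the two-site + arbitrator layout used in this guide.
# Assumes "key = value" lines such as (addresses are placeholders):
#   site = 192.0.2.10
#   site = 198.51.100.10
#   arbitrator = 203.0.113.10
#   ticket = "ticket-name"
check_booth_conf() {
    conf="${1:-/etc/booth/booth.conf}"
    [ -r "$conf" ] || { echo "cannot read $conf" >&2; return 1; }

    # grep -c prints 0 but exits 1 when nothing matches; "|| true" keeps
    # that from aborting a caller running under "set -e".
    sites=$(grep -cE '^[[:space:]]*site[[:space:]]*=' "$conf" || true)
    arbitrators=$(grep -cE '^[[:space:]]*arbitrator[[:space:]]*=' "$conf" || true)
    tickets=$(grep -cE '^[[:space:]]*ticket[[:space:]]*=' "$conf" || true)

    if [ "$sites" -ne 2 ]; then
        echo "expected 2 sites, found $sites" >&2; return 1
    fi
    if [ "$arbitrators" -lt 1 ]; then
        echo "no arbitrator defined" >&2; return 1
    fi
    if [ "$tickets" -lt 1 ]; then
        echo "no ticket defined" >&2; return 1
    fi
    echo "ok: $sites sites, $arbitrators arbitrator(s), $tickets ticket(s)"
}
```

For example, `check_booth_conf /etc/booth/booth.conf` could be run on the arbitrator after the configuration has been pulled. A formation with more than two sites would need the site count relaxed.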

Failover and Failback Scenarios for Multi-Site Clusters

When the primary site (DC1) goes down due to a network issue or disaster, the arbitrator node located in DC3 will revoke the ticket from the DC1 cluster and grant it to the cluster located in DC2.

At this stage, the operator or application administrator can decide to bring the resource groups online in DC2. If the resource groups have been brought online in DC2 and the primary site (DC1) then comes back online, the resource groups in DC2 remain active. Failing the application back to DC1 requires administrator intervention.
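The manual failback can be sketched with the `pcs booth ticket revoke` and `pcs booth ticket grant` subcommands, both of which take the ticket name and an optional site address. This is a minimal sketch, assuming the ticket is currently held by DC2: it revokes the ticket from the DC2 site, which stops the ticket-constrained resources there (stop is the default loss-policy of a ticket constraint), and then grants the ticket to the DC1 site. The function name `failback_ticket` and its arguments are hypothetical, and the site addresses stand for the floating Booth IPs used later in this guide.

```shell
#!/bin/sh
# Hypothetical failback helper: move a Booth ticket from the DR site (DC2)
# back to the primary site (DC1). Site addresses are the floating Booth IPs
# passed to "pcs booth setup".
failback_ticket() {
    ticket="$1"      # e.g. ticket-name
    dc2_site="$2"    # floating Booth IP of the cluster in DC2
    dc1_site="$3"    # floating Booth IP of the cluster in DC1

    if [ -z "$ticket" ] || [ -z "$dc2_site" ] || [ -z "$dc1_site" ]; then
        echo "usage: failback_ticket <ticket> <dc2-site-ip> <dc1-site-ip>" >&2
        return 2
    fi

    # Step 1: take the ticket away from DC2; resources that require it
    # are handled according to the ticket constraint's loss-policy.
    pcs booth ticket revoke "$ticket" "$dc2_site" || return 1

    # Step 2: grant the ticket to DC1 so the constrained resources may start there.
    pcs booth ticket grant "$ticket" "$dc1_site"
}
```

For example, `failback_ticket ticket-name <Floating IP cluster2> <Floating IP cluster1>`, run only after DC1 has been confirmed healthy. Revoking before granting keeps the hand-over an explicit two-step operation, so that DC2 gives up the ticket before DC1 receives it.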

Booth Configuration

The following section describes the Booth configuration. Note that I won't go into the details of creating the clusters, resources and resource groups.
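The first three steps below (package installation and firewall configuration) differ only by the node's role. As a minimal sketch, the hypothetical function `prepare_booth_node` wraps them, taking `cluster` or `arbitrator` as its argument. It uses the same package lists and firewalld service as the steps below, adds `-y` so dnf runs non-interactively, and must be run as root on each node.

```shell
#!/bin/sh
# Hypothetical helper: install packages and open the firewall for a node,
# depending on its role in the Booth formation. Run as root.
prepare_booth_node() {
    role="$1"
    case "$role" in
        cluster)
            # Cluster nodes: pcs, fence agents and the Booth site package.
            pkgs="pcs fence-agents booth-site" ;;
        arbitrator)
            # Arbitrator: pcs plus the Booth core and arbitrator packages.
            pkgs="pcs booth-core booth-arbitrator" ;;
        *)
            echo "usage: prepare_booth_node cluster|arbitrator" >&2
            return 2 ;;
    esac

    # $pkgs is intentionally unquoted so it splits into separate package names.
    dnf install -y $pkgs || return 1

    # Open the firewalld high-availability service permanently on every
    # node, arbitrator included, then load the permanent rules.
    firewall-cmd --permanent --add-service=high-availability || return 1
    firewall-cmd --reload
}
```

For example, `prepare_booth_node cluster` on every node of both clusters and `prepare_booth_node arbitrator` on the arbitrator node.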

- Use the following command to install Pacemaker and Booth on the cluster nodes:

# dnf install pcs fence-agents booth-site

- Use the following command to install pcs and Booth on the Booth arbitrator node:

# dnf install pcs booth-core booth-arbitrator

- Configure firewalld on all nodes, including the arbitrator, to allow the high-availability service:

# firewall-cmd --add-service=high-availability --permanent

# firewall-cmd --reload

- Set up the Booth configuration on a single node. The IP addresses provided for each cluster and for the arbitrator must be unique; each cluster will use its allocated address as a floating IP address within a Booth resource group. Run the following command on node1.cluster1. It generates the configuration files required by the Booth ticket manager, /etc/booth/booth.conf and /etc/booth/booth.key, on the node where it is executed.

[node1.cluster1 ~]# pcs booth setup sites <Floating IP of cluster1> <Floating IP of cluster2> arbitrators <Arbitrator IP>

- Create a ticket for the Booth configuration on the first node of the first cluster (node1.cluster1). This is the ticket you will use to define the resource constraint that allows resources to run only when the ticket has been granted to the cluster. This basic failover procedure uses only one ticket, but you can create additional tickets for more complex scenarios in which each ticket is associated with different resources.

[node1.cluster1 ~] # pcs booth ticket add ticket-name

- Authenticate pcs on the Booth arbitrator node to the first node of the first cluster (note that pcs and Booth must already be installed on the arbitrator and on the cluster nodes). Authentication is necessary to pull the Booth configuration to the arbitrator.

[arbitrator-node ~] # pcs host auth node1.cluster1

[arbitrator-node ~] # pcs booth pull node1.cluster1

- Pull the Booth configuration to the other cluster and synchronise it to all nodes of that cluster. Remember to first authenticate pcs to the node from which the configuration is pulled:

[node1.cluster2 ~]# pcs host auth node1.cluster1

[node1.cluster2 ~]# pcs booth pull node1.cluster1

[node1.cluster2 ~]# pcs booth sync

- Start and enable the Booth service on the arbitrator node, then check its status:

[arbitrator-node ~] # pcs booth start

[arbitrator-node ~] # pcs booth enable

[arbitrator-node ~] # pcs booth status

- Continue the Booth configuration by configuring Booth as a cluster resource in each cluster. This requires the floating IP assigned to each cluster. The following commands create a resource group with booth-ip and booth-service as members of the group:

[node1.cluster1 ~] # pcs booth create ip <Floating IP cluster1>

[node1.cluster2 ~] # pcs booth create ip <Floating IP cluster2>

- Add a ticket constraint to the application resource group (app_rg in this example) in each cluster:

[node1.cluster1 ~] # pcs constraint ticket add ticket-name app_rg

[node1.cluster2 ~] # pcs constraint ticket add ticket-name app_rg

- To activate the resource group at a specific site, grant the ticket to that site. Here the ticket is granted to cluster1:

[node1.cluster1 ~] # pcs booth ticket grant ticket-name

- Verify the Booth status on the arbitrator node:

[arbitrator-node ~] # pcs booth status

TICKETS:

ticket: ticket-name, leader: 172.25.250.50, expires: 2024-05-22 00:50:21

PEERS:

site 172.25.250.50, last recv: 2024-05-22 00:40:22

Sent pkts:93 error:0 resends:0

Recv pkts:92 error:0 authfail:0 invalid:0

site 172.25.250.60, last recv: 2024-05-21 20:59:47

Sent pkts:2 error:0 resends:0

Recv pkts:1 error:0 authfail:0 invalid:0

We hope this guide helped you understand how the Booth ticket manager works and how to configure it. If you’d like to learn more about Pacemaker, check out my other blogs or reach out to Insentra.
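For scripted health checks, the `ticket:` line of the `pcs booth status` output shown above can be parsed. This is a minimal sketch, assuming the `ticket: <name>, leader: <address>, expires: <time>` format seen in the sample output (treat it as an observed format rather than a documented interface): the hypothetical function `booth_ticket_leader` reads status text on standard input and prints the leader address of the named ticket, returning a non-zero status if the ticket is not listed.

```shell
#!/bin/sh
# Hypothetical helper: print the leader (the site holding the ticket) for one
# ticket, reading "pcs booth status" output on stdin. Assumes lines like:
#   ticket: ticket-name, leader: 172.25.250.50, expires: 2024-05-22 00:50:21
booth_ticket_leader() {
    awk -v t="$1" '
        BEGIN { prefix = "ticket: " t ", leader: " }
        {
            line = $0
            sub(/^[ \t]+/, "", line)            # ignore any indentation
            if (index(line, prefix) != 1) next  # not the requested ticket line
            addr = substr(line, length(prefix) + 1)
            sub(/,.*/, "", addr)                # keep text up to the next comma
            print addr
            found = 1
            exit
        }
        END { exit (found ? 0 : 1) }
    '
}
```

On the arbitrator, `pcs booth status | booth_ticket_leader ticket-name` would then print the address of the site currently holding the ticket, which can be compared with each cluster's floating IP.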