So, you’ve got booth clusters—a lot of them. And you’ve got one trusty arbitrator node. Now, you’re wondering, “Can this one arbitrator manage multiple booth processes, like a bouncer handling entry at multiple nightclub doors?” The answer: absolutely, but it’s going to require some fancy footwork.

Imagine your arbitrator node as a skilled bartender in a bustling pub, serving drinks (read: tickets) to multiple patrons (read: clusters) at the same time. Just like a bartender who can mix a cocktail while keeping an eye on the crowd, your arbitrator node can run multiple instances of the boothd process, ensuring that each set of clusters gets their tickets handled with precision and care. The question isn’t whether it can be done—it’s whether your arbitrator is ready for the happy hour rush!

A few things to remember: in a default configuration, a single boothd process treats all the clusters that connect to it as members of one Booth configuration, sharing the same sites, arbitrator and tickets. For an arbitrator node to serve separate subsets of clusters, it must run multiple booth instances, one per configuration file.

Let’s get into this.

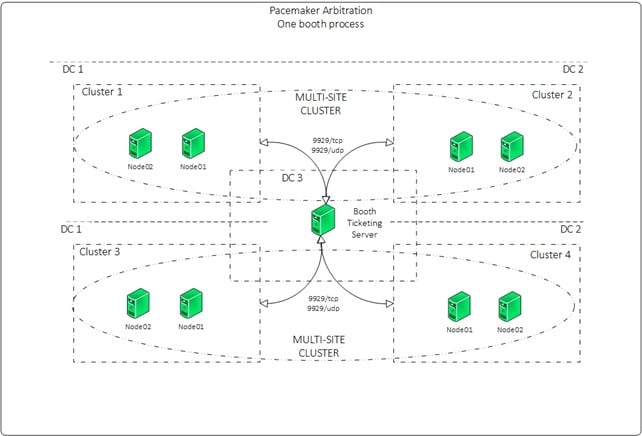

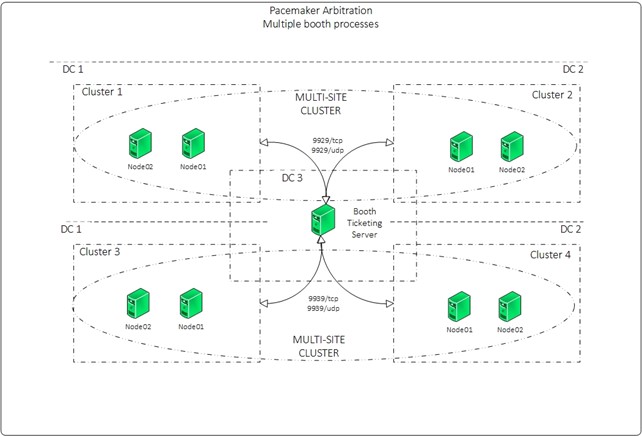

First, we need to create a Booth configuration on the existing clusters. Let’s assume you have two sets of clusters in a multi-site topology:

- Two two-node clusters (cluster1 and cluster2) in a multi-site topology providing HA/DR for the mq application

- Two two-node clusters (cluster3 and cluster4) in a multi-site topology providing HA/DR for the web application

Booth Server Configuration

- Install required packages:

[arbitrator]# dnf install pcs booth-core booth-arbitrator

- If you are running firewalld, add the high-availability service:

[arbitrator]# firewall-cmd --add-service=high-availability --permanent

[arbitrator]# firewall-cmd --reload

- Alternatively, to open only the Booth-related ports, add the booth service instead:

[arbitrator]# firewall-cmd --reload

[arbitrator]# firewall-cmd --add-service=booth --permanent

[arbitrator]# firewall-cmd --reload

Booth Configuration on Cluster Nodes

- Set up a Booth configuration on a single node of the cluster. The addresses given for each cluster and for the arbitrator must be IP addresses, and each cluster should use a floating IP address. Run the following command on cluster1.node1 of the cluster1 cluster; it generates the configuration files /etc/booth/booth.conf and /etc/booth/booth.key on that node.

[cluster1.node1]# pcs booth setup sites <Floating IP of cluster1> <Floating IP of cluster2> arbitrators <Arbitrator IP>

- Create a ticket for the Booth configuration on the first node of the first cluster (cluster1.node1). You will use this ticket to define a resource constraint that allows resources to run only when the ticket has been granted to the cluster. This basic failover procedure uses only one ticket, but you can create additional tickets for more complex scenarios in which each ticket is associated with different resources.

[cluster1.node1]# pcs booth ticket add mqticket

- Synchronise the Booth configuration to all nodes in cluster1. This command copies booth.conf and booth.key to the /etc/booth/ directory on every node of cluster1:

[cluster1.node1]# pcs booth sync

- There are two ways to copy the configuration files to the other cluster (cluster2). The first is to authenticate cluster2.node1 to cluster1.node1 and pull the configuration:

[cluster2.node1]# pcs host auth cluster1.node1

[cluster2.node1]# pcs booth pull cluster1.node1

[cluster2.node1]# pcs booth sync

- Alternatively, copy /etc/booth/booth.conf and /etc/booth/booth.key to cluster2.node1 yourself (keeping the same permissions) and run the following command to copy the configuration to all nodes in cluster2:

[cluster2.node1]# pcs booth sync

- Continue with the Booth configuration by configuring Booth as a cluster resource. This requires the floating IP assigned to each cluster. The following commands create a resource group with booth-ip and booth-service as its members:

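[cluster1.node1]# pcs booth create ip <Floating IP of cluster1>

[cluster2.node1]# pcs booth create ip <Floating IP of cluster2>

- Add a ticket constraint to the application resource group defined on each cluster:

[cluster1.node1]# pcs constraint ticket add mqticket mq_app_rg

[cluster2.node1]# pcs constraint ticket add mqticket mq_app_rg

- Repeat all of the above steps for cluster3 and cluster4, this time creating webticket and adding it to web_app_rg. Because the arbitrator will serve the web configuration on port 9939 rather than the default 9929, also add a port = 9939 line to the global section of /etc/booth/booth.conf (above the ticket line) on cluster3.node1 before synchronising it: every member of a Booth configuration must use the same port.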
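Since cluster3 and cluster4 repeat the cluster1/cluster2 steps with different names, addresses and port, the whole sequence can be captured in a short POSIX shell function. This is a sketch under stated assumptions: `print_site_steps` is a hypothetical helper that only prints each command with its node prompt and runs nothing; it assumes the `<app>ticket` and `<app>_app_rg` naming used in this article and the web floating IPs that appear in webcluster.conf below. It also assumes that `pcs booth ticket add` keeps a manually added `port` line when it rewrites booth.conf, so check the file before syncing.

```sh
#!/bin/sh
# Dry run: print (never execute) the cluster-side Booth commands for one
# application, using this article's naming convention:
#   ticket = <app>ticket, application resource group = <app>_app_rg
# print_site_steps is a hypothetical helper, not part of pcs or booth.
set -eu

# print_site_steps APP NODE_A NODE_B FLOATING_IP_A FLOATING_IP_B ARBITRATOR_IP [PORT]
print_site_steps() {
  app=$1 node_a=$2 node_b=$3 ip_a=$4 ip_b=$5 arb_ip=$6 port=${7:-}
  ticket="${app}ticket"
  group="${app}_app_rg"

  echo "[$node_a]# pcs booth setup sites $ip_a $ip_b arbitrators $arb_ip"
  # A non-default port belongs in the global section, before any ticket line.
  if [ -n "$port" ]; then
    echo "[$node_a]# echo 'port = $port' >> /etc/booth/booth.conf"
  fi
  echo "[$node_a]# pcs booth ticket add $ticket"
  echo "[$node_a]# pcs booth sync"
  echo "[$node_b]# pcs host auth $node_a"
  echo "[$node_b]# pcs booth pull $node_a"
  echo "[$node_b]# pcs booth sync"
  echo "[$node_a]# pcs booth create ip $ip_a"
  echo "[$node_b]# pcs booth create ip $ip_b"
  echo "[$node_a]# pcs constraint ticket add $ticket $group"
  echo "[$node_b]# pcs constraint ticket add $ticket $group"
}

# Web clusters: floating IPs and port as used in webcluster.conf on the arbitrator.
print_site_steps web cluster3.node1 cluster4.node1 \
  192.168.203.100 192.168.204.100 192.168.50.151 9939
```

Calling it as `print_site_steps mq cluster1.node1 cluster2.node1 192.168.201.100 192.168.202.100 192.168.50.151`, without a port, reproduces the mq sequence above with booth left on its default port.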

Update the configuration on the booth server

- Copy /etc/booth/booth.conf and /etc/booth/booth.key from cluster1 and from cluster3 to /etc/booth on the arbitrator server:

- Save the booth.conf from the mq cluster as mqcluster.conf

- Save the booth.key from the mq cluster as mqbooth.key

- Save the booth.conf from the web cluster as webcluster.conf

- Save the booth.key from the web cluster as webbooth.key
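Staging the files on the arbitrator, and checking the result before the services are started, can also be scripted. The sketch below is not part of booth or pcs: `stage_instance` and `check_instances` are hypothetical helpers, it assumes Linux with GNU coreutils and GNU sed, and the source directory holding a cluster's copied files is whatever you choose. `stage_instance` also points the authfile line at the renamed key, which is the same edit shown in the configurations below. `check_instances` is intended for after the port and SELinux steps that follow; it reads saved `semanage port -l` output instead of calling semanage, and it reports two instance configurations that share a port, since every instance on the arbitrator listens on the same arbitrator IP address.

```sh
#!/bin/sh
# Hypothetical helpers for running several boothd instances on one arbitrator.
# Assumes Linux with GNU coreutils and GNU sed (for sed -i).
set -eu

# stage_instance APP SRC_DIR [DEST_DIR]
# Copy booth.conf/booth.key taken from one cluster into DEST_DIR as
# <APP>cluster.conf and <APP>booth.key, point authfile at the renamed key,
# and print the matching systemd unit name.
stage_instance() {
  app=$1 src_dir=$2 dest_dir=${3:-/etc/booth}
  conf="$dest_dir/${app}cluster.conf"
  key="$dest_dir/${app}booth.key"

  # -p keeps the key's restrictive mode (and ownership when run as root).
  cp -p "$src_dir/booth.conf" "$conf"
  cp -p "$src_dir/booth.key" "$key"
  sed -i "s|^authfile *=.*|authfile = $key|" "$conf"
  echo "booth@${app}cluster.service"
}

# check_instances CONF_DIR SEMANAGE_OUTPUT
# Return 1 if two *.conf files use the same port (9929 when no port line is
# set) or if a port is missing from the boothd_port_t tcp/udp lines of saved
# "semanage port -l" output. Port ranges such as 9000-9010 are not handled.
check_instances() {
  conf_dir=$1 selinux_list=$2
  seen=" " rc=0

  for conf in "$conf_dir"/*.conf; do
    [ -e "$conf" ] || continue
    name=$(basename "$conf" .conf)
    # Value of the first "port =" line, without comments or blanks.
    port=$(awk -F'=' '/^[ \t]*port[ \t]*=/ {
             sub(/#.*/, "", $2); gsub(/[ \t]/, "", $2); print $2; exit }' "$conf")
    port=${port:-9929}

    case $seen in
      *" $port "*) echo "$name: port $port is already used by another instance" >&2; rc=1 ;;
      *) seen="$seen$port " ;;
    esac

    for proto in tcp udp; do
      if ! awk -v p="$port" -v proto="$proto" '
             $1 == "boothd_port_t" && $2 == proto {
               for (i = 3; i <= NF; i++) { v = $i; gsub(/,/, "", v); if (v == p) found = 1 }
             }
             END { exit (found ? 0 : 1) }' "$selinux_list"; then
        echo "$name: port $port/$proto is not labelled boothd_port_t" >&2
        rc=1
      fi
    done
  done
  return "$rc"
}
```

For example, with the files from cluster1 copied to a hypothetical /root/from-cluster1 directory, `stage_instance mq /root/from-cluster1` creates /etc/booth/mqcluster.conf and /etc/booth/mqbooth.key and prints `booth@mqcluster.service`. Once the ports and SELinux labels are in place, `semanage port -l > /tmp/ports.txt` followed by `check_instances /etc/booth /tmp/ports.txt` should return 0 and print nothing.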

- Update mqcluster.conf as follows:

port = 9929 # Default booth port

transport = UDP # Only UDP is currently supported

site = 192.168.201.100

site = 192.168.202.100

arbitrator = 192.168.50.151

authfile = /etc/booth/mqbooth.key

ticket = "mqticket"

- Update webcluster.conf as follows:

port = 9939

transport = UDP

site = 192.168.203.100

site = 192.168.204.100

arbitrator = 192.168.50.151

authfile = /etc/booth/webbooth.key

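ticket = "webticket"

- If firewalld is running, open port 9939 (TCP and UDP) on the arbitrator and on the cluster3 and cluster4 nodes, which also communicate on this port; the high-availability service only opens the default booth port 9929.

- If you are running SELinux on the arbitrator (as you should), you need to add the new port to the SELinux policy (run the same commands on the cluster3 and cluster4 nodes if SELinux is enforcing there):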

[arbitrator]# semanage port -a -t boothd_port_t -p tcp 9939

[arbitrator]# semanage port -a -t boothd_port_t -p udp 9939

- Verify the SELinux configuration:

[arbitrator]# semanage port -l | grep boothd

boothd_port_t tcp 9939, 9929

boothd_port_t udp 9939, 9929

- Start the boothd instances. The part of the unit name after ‘@’ must be the name of the configuration file without ‘.conf’:

[arbitrator]# systemctl enable --now booth@mqcluster.service

[arbitrator]# systemctl enable --now booth@webcluster.service

- Verify that both booth instances are running:

[arbitrator]# systemctl status booth@mqcluster.service

[arbitrator]# systemctl status booth@webcluster.service

- List the tickets for each configuration:

[arbitrator]# booth list -c /etc/booth/mqcluster.conf

ticket: mqticket, leader: NONE

[arbitrator]# booth list -c /etc/booth/webcluster.conf

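ticket: webticket, leader: NONE

A leader of NONE means the ticket has not been granted yet. Grant each ticket once to the site that should run the application first, for example by running pcs booth ticket grant mqticket on a node of cluster1.

The following diagram is our final configuration: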

Final Notes

The above Booth configuration sets up automatic site failover, where the Booth arbitrator monitors the clusters. If communication with one of the clusters is lost, the arbitrator allows another cluster to take over the ticket and enable resources.

For manual failover, the Booth arbitrator is not required, as the clusters continuously exchange information with each other. To configure manual failover, modify the booth.conf configuration file on each cluster as follows:

port = 9929

transport = UDP

site = 192.168.201.100

site = 192.168.202.100

arbitrator = 192.168.50.151

authfile = /etc/booth/booth.key

ticket = "mqticket"

mode = manual

Refer to the following links for more information:

- https://documentation.suse.com/sle-ha/15-SP6/html/SLE-HA-all/cha-ha-geo-booth.html

- https://github.com/ClusterLabs/booth/blob/main/docs/boothd.8.txt

If you have questions or need assistance with your booth configuration, feel free to browse our Insentra Insights or reach out to us for a consultation.